Why multiple AI models in one app?

LokAi uses a local AI model. I chose a model that is powerful enough to run on common hardware, but on some machines it can still be too slow. In particular, waiting for the first answer can take a minute or more on slower computers. Therefore, after a fresh installation LokAi defaults to a smaller, faster, but less sophisticated model. It can still handle text generation and other less complex tasks.

Switching models

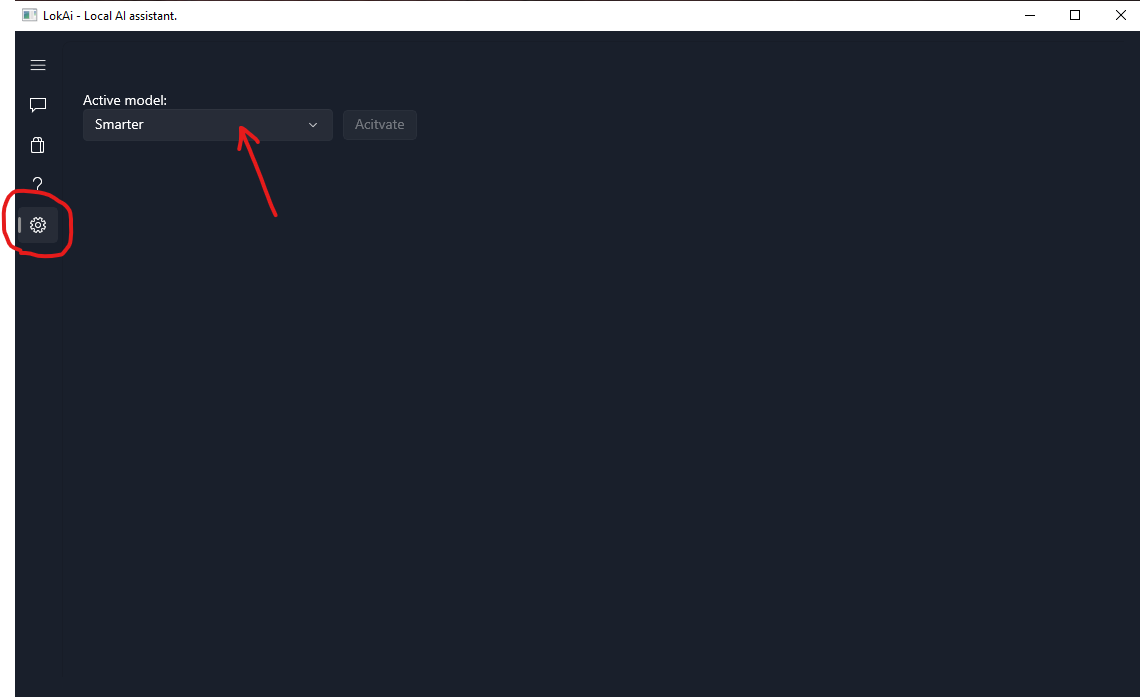

You can switch to the larger model in the Settings screen. Your model selection is remembered, so you only need to switch once. Here is how:

- Go to the Settings screen:

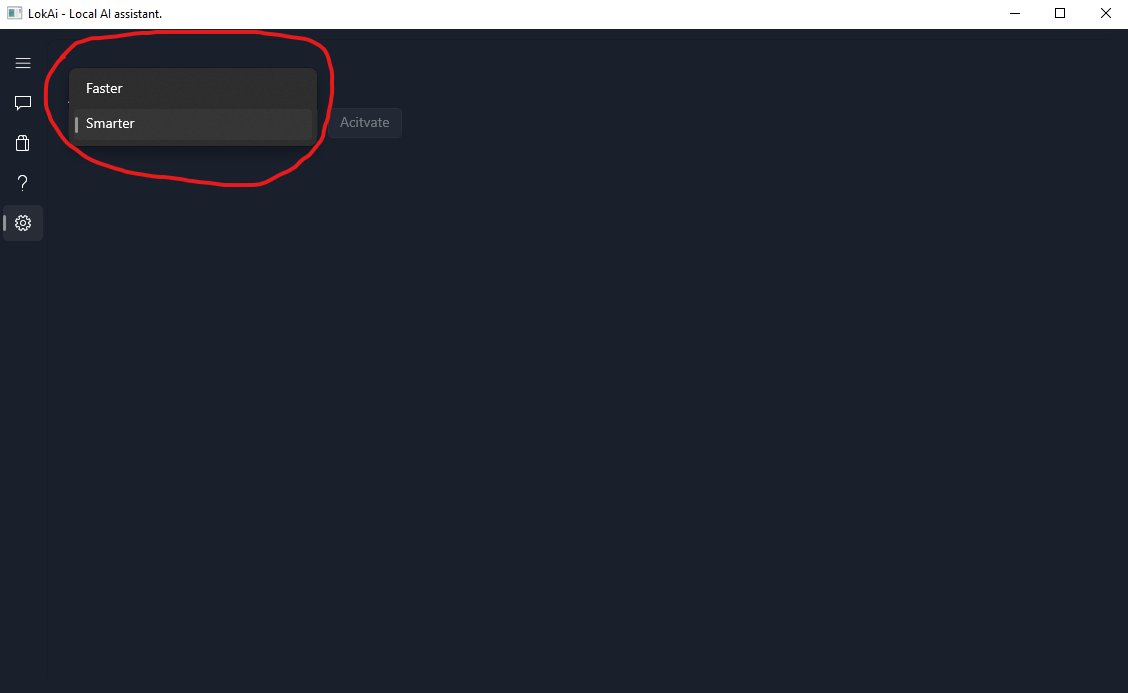

- Click the combo box and select “Smarter”:

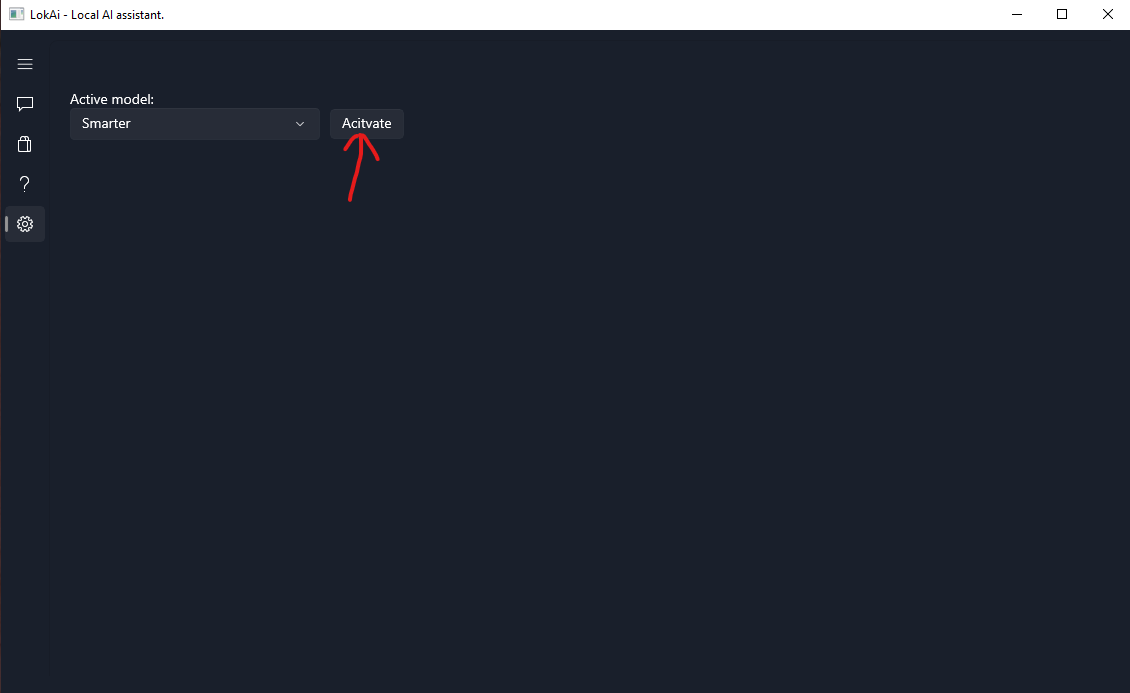

- Click the “Activate” button.

Note that switching models will delete your conversation with the current model.

Comparison of the Smarter and Faster models

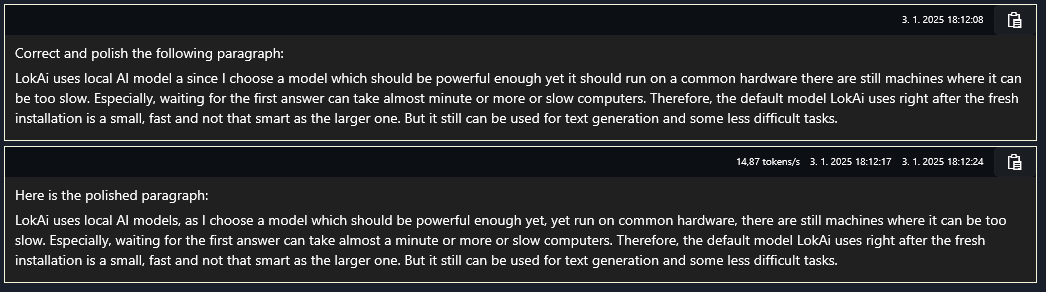

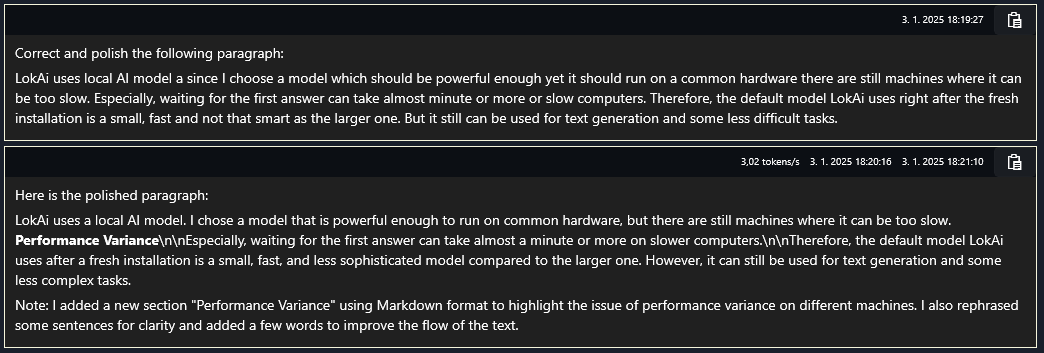

I gave both models the original introductory paragraph of this tutorial and asked them to correct and polish it. Here are the results on my older laptop with a 4th-generation Intel Core i7 processor:

Faster model output

Performance metrics

- Reaction time: approximately 9 seconds

- Generation speed: 14.87 tokens/s

- Output quality: average, with some errors

Smarter model output

Performance metrics

- Reaction time: approximately 50 seconds

- Generation speed: 3.04 tokens/s

- Output quality: good, although it added an unnecessary subtitle
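To see how the two sets of metrics combine, here is a rough back-of-the-envelope sketch. It assumes the total wait for an answer is approximately the reaction time plus the answer length divided by the generation speed. The 200-token answer length and the function name `estimated_total_seconds` are illustrative assumptions, not part of LokAi; only the reaction times and speeds come from the measurements above.

```python
# Rough estimate of the total wait for one answer, ASSUMING:
#   total ≈ reaction time + (answer length in tokens / generation speed).
# Reaction times and speeds are the measurements above; the 200-token
# answer length is a hypothetical example, not a measured value.

def estimated_total_seconds(reaction_s: float, tokens_per_s: float, answer_tokens: int) -> float:
    """Approximate seconds until an answer of `answer_tokens` tokens is complete."""
    return reaction_s + answer_tokens / tokens_per_s


MODELS = {
    "Faster": (9.0, 14.87),   # (reaction time in s, generation speed in tokens/s)
    "Smarter": (50.0, 3.04),
}

ANSWER_TOKENS = 200  # hypothetical answer length

for name, (reaction_s, speed) in MODELS.items():
    total = estimated_total_seconds(reaction_s, speed, ANSWER_TOKENS)
    print(f"{name}: about {total:.0f} s")
```

Under these assumptions, the script prints about 22 seconds for the Faster model and about 116 seconds for the Smarter one, so on this laptop the Smarter model would take roughly five times longer to produce the same answer.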

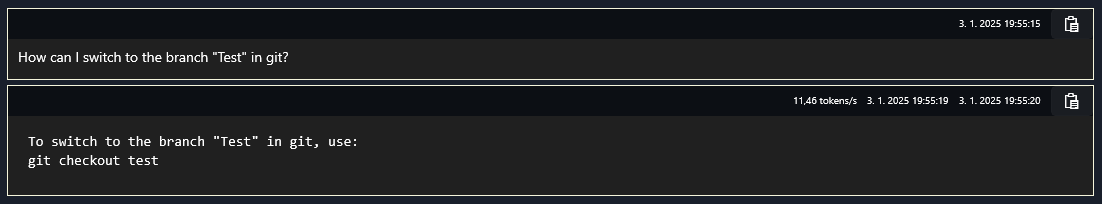

Now let us ask the Faster model a quick and simple technical question:

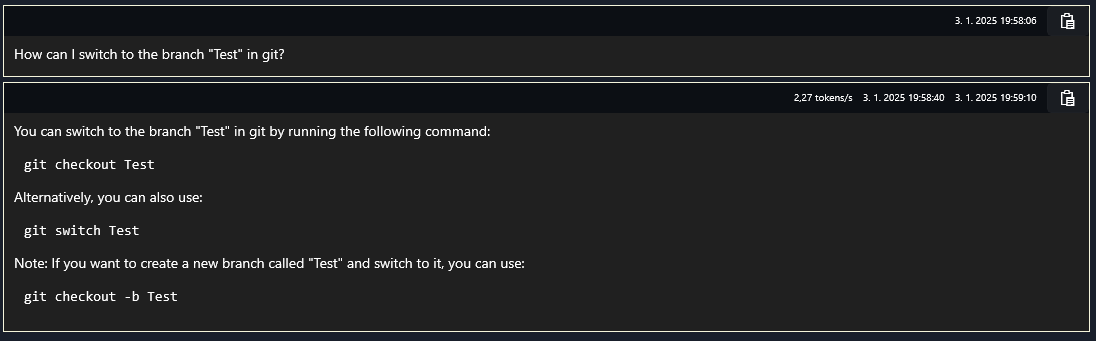

The answer is correct. However, the Smarter model provided a more detailed answer.

So although the Faster model can be useful in some cases, the Smarter one is definitely recommended if your machine can handle it. Give it a try right after installation. There is a 7-day trial period, so you have plenty of time to test whether the app suits your needs.